The geothermal gradient varies with location and is typically measured by determining the bottom open-hole temperature after a borehole has been drilled. To achieve accuracy, the drilling fluid must be given time to reach the ambient temperature, which is not always possible for practical reasons.

In stable tectonic areas in the tropics, a temperature-depth plot will converge to the annual average surface temperature. However, in areas where deep permafrost developed during the Pleistocene, a low-temperature anomaly can be observed that persists down to several hundred metres.[14] The Suwałki cold anomaly in Poland has led to the recognition that similar thermal disturbances related to Pleistocene-Holocene climatic changes are recorded in boreholes throughout Poland, as well as in Alaska, northern Canada, and Siberia.

In areas of Holocene uplift and erosion (Fig. 1), the initial gradient will be higher than the average until it reaches an inflection point where it joins the stabilized heat-flow regime. If the gradient of the stabilized regime is projected above the inflection point to its intersection with the present-day annual average temperature, the height of this intersection above the present-day surface gives a measure of the extent of Holocene uplift and erosion. In areas of Holocene subsidence and deposition (Fig. 2), the initial gradient will be lower than the average until it reaches an inflection point where it joins the stabilized heat-flow regime.
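The projection described above amounts to two steps. First, fit a straight line to temperatures measured below the inflection point. Then solve for the height at which that line reaches the present-day annual average surface temperature. This is a minimal sketch that assumes depth is measured positive downward and uses an ordinary least-squares fit. The readings and the 10 °C surface temperature are hypothetical.

```python
def fit_line(depths_m, temps_c):
    """Ordinary least-squares fit T = t0 + g * z; returns (g, t0)."""
    n = len(depths_m)
    if n < 2:
        raise ValueError("need at least two measurements")
    z_mean = sum(depths_m) / n
    t_mean = sum(temps_c) / n
    sxx = sum((z - z_mean) ** 2 for z in depths_m)
    sxy = sum((z - z_mean) * (t - t_mean) for z, t in zip(depths_m, temps_c))
    g = sxy / sxx
    return g, t_mean - g * z_mean


def projected_surface_offset(depths_m, temps_c, surface_temp_c):
    """Height (m) above the present surface at which the stabilized-regime
    line reaches the annual average surface temperature.

    Depth z is positive downward. A positive result means the intersection
    lies above today's surface (the uplift-and-erosion case); a negative
    result means it lies below.
    """
    g, t0 = fit_line(depths_m, temps_c)
    z_star = (surface_temp_c - t0) / g  # depth where the fitted line equals surface_temp_c
    return -z_star


# Hypothetical readings taken below the inflection point (stabilized regime).
depths = [1000.0, 1500.0, 2000.0]   # m
temps = [40.0, 50.0, 60.0]          # °C
print(projected_surface_offset(depths, temps, surface_temp_c=10.0))  # ≈ 500 m
```

With real logs, only points clearly below the inflection point should go into the fit. Points from the disturbed near-surface zone would bias the slope.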

In deep boreholes, the temperature of the rock below the inflection point generally increases with depth at rates of the order of 20 K/km or more.[citation needed] Fourier's law of heat flow applied to the Earth gives q = Mg, where q is the heat flux at a point on the Earth's surface, M is the thermal conductivity of the rocks there, and g is the measured geothermal gradient. A representative value for the thermal conductivity of granitic rocks is M = 3.0 W/mK. Hence, using the global average conductive geothermal gradient of 0.02 K/m, we get q = 0.06 W/m². This estimate is corroborated by thousands of heat-flow observations in boreholes all over the world, which give a global average of 6×10⁻² W/m². Thus, if the geothermal heat flow rising through an acre of granite terrain could be captured efficiently, it would light four 60-watt light bulbs.
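The heat-flow arithmetic above can be reproduced in a few lines. This sketch uses the text's values (M = 3.0 W/mK, g = 0.02 K/m). The only added number is the size of an international acre, about 4046.86 m². It works with magnitudes only; the full form of Fourier's law carries a minus sign, because heat flows down the temperature gradient.

```python
ACRE_M2 = 4046.8564224  # international acre in square metres


def heat_flux(conductivity_w_per_mk, gradient_k_per_m):
    """One-dimensional Fourier's law, magnitude only: q = M * g."""
    return conductivity_w_per_mk * gradient_k_per_m


q = heat_flux(3.0, 0.02)     # granite, global average gradient
power_w = q * ACRE_M2        # heat rising through one acre
bulbs = power_w / 60.0       # equivalent number of 60 W bulbs

print(f"q = {q:.3f} W/m^2, one acre = {power_w:.0f} W, about {bulbs:.1f} bulbs")
# prints: q = 0.060 W/m^2, one acre = 243 W, about 4.0 bulbs
```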

A variation in surface temperature induced by climate changes and the Milankovitch cycle can penetrate below the Earth's surface and produce an oscillation in the geothermal gradient. The oscillation has periods ranging from daily to tens of thousands of years and an amplitude that decreases with depth, with a scale depth of several kilometers.[15][16] Meltwater from the polar ice caps flowing along ocean bottoms tends to maintain a constant geothermal gradient throughout the Earth's surface.[15]
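Why longer-period signals reach deeper can be shown with the textbook model of a periodic surface temperature on a uniform, purely conductive half-space. In that model the amplitude decays as exp(-z/δ), with skin depth δ = sqrt(κP/π), where P is the period. This model is not stated in the text. The diffusivity κ = 1e-6 m²/s is an assumed typical value for rock. On those assumptions, a daily cycle fades within about 0.17 m, an annual one within about 3.2 m, and a 100 kyr cycle within about 1 km.

```python
import math

SECONDS_PER_YEAR = 365.25 * 86400.0
KAPPA = 1.0e-6  # m^2/s, assumed typical thermal diffusivity of rock


def skin_depth(period_s, kappa=KAPPA):
    """e-folding depth of a periodic surface temperature signal in a
    uniform conductive half-space: delta = sqrt(kappa * P / pi)."""
    return math.sqrt(kappa * period_s / math.pi)


def amplitude_ratio(depth_m, period_s, kappa=KAPPA):
    """Fraction of the surface temperature amplitude remaining at depth_m."""
    return math.exp(-depth_m / skin_depth(period_s, kappa))


for label, period in [("daily", 86400.0),
                      ("annual", SECONDS_PER_YEAR),
                      ("100 kyr", 1.0e5 * SECONDS_PER_YEAR)]:
    print(f"{label:>8}: skin depth ~ {skin_depth(period):,.2f} m")
```

Because δ grows with the square root of the period, a signal must have a period roughly a hundred times longer to penetrate ten times deeper.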

If that rate of temperature change were constant, temperatures deep in the Earth would soon reach the point where all known rocks would melt. We know, however, that the Earth's mantle is solid because it transmits S-waves. The temperature gradient dramatically decreases with depth for two reasons. First, radioactive heat production is concentrated within the crust of the Earth, and particularly within the upper part of the crust, as concentrations of uranium, thorium, and potassium are highest there: these three elements are the main producers of radioactive heat within the Earth. Second, the mechanism of thermal transport changes from conduction, as within the rigid tectonic plates, to convection, in the portion of Earth's mantle that convects. Despite its solidity, most of the Earth's mantle behaves over long time-scales as a fluid, and heat is transported by advection, or material transport. Thus, the geothermal gradient within the bulk of Earth's mantle is of the order of 0.3 kelvin per kilometer, and is determined by the adiabatic gradient associated with mantle material (peridotite in the upper mantle).
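The contrast between the two gradients in the paragraph above is easiest to see as a temperature rise across the whole mantle. This rough sketch assumes a mantle thickness of about 2,900 km, roughly the depth of the core-mantle boundary; that figure is not given in the text. It ignores the crust and lithosphere entirely and is only meant to compare orders of magnitude.

```python
MANTLE_THICKNESS_KM = 2900.0  # approximate depth of the core-mantle boundary (assumption)


def temperature_rise(gradient_k_per_km, thickness_km=MANTLE_THICKNESS_KM):
    """Temperature increase across a layer if the gradient stays constant."""
    return gradient_k_per_km * thickness_km


crustal_rate = temperature_rise(20.0)   # borehole-type gradient held all the way down
adiabatic = temperature_rise(0.3)       # mantle adiabatic gradient quoted in the text
print(f"constant 20 K/km: +{crustal_rate:,.0f} K; adiabatic 0.3 K/km: +{adiabatic:,.0f} K")
# prints: constant 20 K/km: +58,000 K; adiabatic 0.3 K/km: +870 K
```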

This heating can be either beneficial or detrimental in engineering terms. Geothermal energy can be used to generate electricity: the heat of the surrounding rock layers underground heats water, and the resulting steam is routed through a turbine connected to a generator.

On the other hand, drill bits have to be cooled not only because of the friction created by the process of drilling itself but also because of the heat of the surrounding rock at great depth. Very deep mines, like some gold mines in South Africa, need the air inside to be cooled and circulated to allow miners to work at such great depth.

Wiki

Tuesday, November 9, 2010

Geothermal

Wednesday, October 6, 2010

Fuel from Sewage

Sewage sludge could be used to make biodiesel fuel in a process that’s within a few percentage points of being cost-competitive with conventional fuel, a new report indicates.

A four percent reduction in the cost of making this alternative fuel would make it “competitive” with traditional petroleum-based diesel fuel, according to the author, David M. Kargbo of the U.S. Environmental Protection Agency.

However, he cautions that there are still “huge challenges” involved in reducing the price and in satisfying likely regulatory concerns. The findings by Kargbo, who is with the agency’s Region III Office of Innovation in Philadelphia, appear in Energy & Fuels, a journal of the American Chemical Society.

Traditional petroleum-based fuels are increasingly beset by environmental, political and supply concerns, so research into alternative fuels is gaining in popularity.

Conventional diesel fuel, like gasoline, is extracted from petroleum, or crude oil, and is used to power many trucks, boats, buses, and farm equipment. An alternative to conventional diesel is biodiesel, which is derived from sources other than crude oil, such as vegetable oil or animal fat. However, these sources are relatively expensive, and the higher prices have limited the use of biodiesel.

Kargbo argues that a cheaper alternative would be to make biodiesel from municipal sewage sludge, the solid material left behind from the treatment of sewage at wastewater treatment plants. The United States alone produces about seven million tons of sewage sludge yearly.

To boost biodiesel production, sewage treatment plants would have to use microbes that produce higher amounts of oil than the microbes currently used for wastewater treatment, Kargbo said. That step alone, he added, could increase biodiesel production to the 10 billion gallon mark, which is more than triple the nation’s current biodiesel production capacity.

“Currently the estimated cost of production is $3.11 per gallon of biodiesel. To be competitive, this cost should be reduced to levels that are at or below [recent] petro diesel costs of $3.00 per gallon,” the report says.
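The "four percent" figure can be checked against the two costs quoted in the report. The gap works out to about 3.5 percent of the current cost, which rounds to the stated figure. The variable names below are illustrative only.

```python
current_cost = 3.11   # $/gallon, estimated sludge-biodiesel cost (from the report)
target_cost = 3.00    # $/gallon, recent petro-diesel cost (from the report)

reduction = (current_cost - target_cost) / current_cost
print(f"required cost reduction: {reduction:.1%}")
# prints: required cost reduction: 3.5%
```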

However, the challenges in both lowering this cost and satisfying regulatory and environmental concerns remain “huge,” Kargbo wrote. Questions surround methods of collecting the sludge, separating the biodiesel from other materials, maintaining biodiesel quality, preventing unwanted soap formation during production, and removing pharmaceutical contaminants from the sludge.

Nonetheless, “biodiesel production from sludge could be very profitable in the long run,” he added.

World Science

Comets

Comets may have come from other solar systems.

Many of the best known comets, including Halley, Hale-Bopp and McNaught, may have been born orbiting other stars, according to a new theory.

The proposal comes from a team of astronomers led by Hal Levison of the Southwest Research Institute in Boulder, Colo., who used computer simulations to show that the Sun may have captured small icy bodies from “sibling” stars when it was young.

Scientists believe the Sun formed in a cluster of hundreds of stars closely packed within a dense gas cloud. Each star would have formed many small icy bodies, or comets, Levison and colleagues say. These would have arisen from the disk-shaped zone of gas and dust around each star from which its planets formed.

Most of these comets were slung out of these fledgling planetary systems due to gravitational interactions with newly forming giant planets, the theory goes. The comets would then have become tiny, free-floating members of the cluster.

The Sun’s cluster came to a violent end, however, when its gas was blown out by the hottest young stars, according to Levison and colleagues. The new models show that the Sun then gravitationally captured a large cloud of comets as the cluster dispersed.

“When it was young, the Sun shared a lot of spit with its siblings, and we can see that stuff today,” said Levison, whose research is published in the June 10 advance online issue of the research journal Proceedings of the National Academy of Sciences.

“The process of capture is surprisingly efficient and leads to the exciting possibility that the cloud contains a potpourri that samples material from a large number of stellar siblings of the Sun,” added Martin Duncan of Queen’s University, Canada, a co-author of the study.

The team cites as evidence a bubble-shaped region of comets, known as the Oort cloud, that surrounds the Sun, extending halfway to the nearest star. It has been commonly assumed this cloud formed from the Sun’s proto-planetary disk, the structure from which planets formed. But because detailed models show that comets from the solar system produce a much more anemic cloud than observed, another source is needed, Levison’s group contends.

“More than 90 percent of the observed Oort cloud comets [must] have an extra-solar origin,” assuming the Sun’s proto-planetary disk can be used to estimate the Oort Cloud’s indigenous population, Levison said.

World Science

Solar System

Solar system’s distant ice-rocks come into focus

Beyond where Neptune—officially our solar system’s furthest planet—circles the Sun, there float countless faint, icy rocks.

They’re called trans-Neptunian objects, and one of the biggest is Pluto, once classified as a planet but now designated a “dwarf planet.” This region also supplies us with comets such as the famous Comet Halley.

Now, astronomers using new techniques to cull the data archives of NASA’s Hubble Space Telescope have added 14 new trans-Neptunian objects to the known catalog. Their method, they say, promises to turn up hundreds more.

“Trans-Neptunian objects interest us because they are building blocks left over from the formation of the solar system,” said Cesar Fuentes, formerly with the Harvard-Smithsonian Center for Astrophysics and now at Northern Arizona University. He is the lead author of a paper on the findings, to appear in The Astrophysical Journal.

As trans-Neptunian objects, or TNOs, slowly orbit the sun, they move against the starry background, appearing as streaks of light in time exposure photographs. The team developed software to scan hundreds of Hubble images for such streaks. After promising candidates were flagged, the images were visually examined to confirm or refute each discovery.

Most TNOs are located near the ecliptic, the line in the sky that marks the plane of the solar system. Astronomers say this is an outgrowth of the fact that the solar system formed from a disk of material. The researchers therefore searched for objects near the ecliptic.

They found 14 bodies, including one “binary,” that is, a pair whose members orbit each other. All were more than 100 million times fainter than objects visible to the unaided eye. By measuring their motion across the sky, astronomers calculated an orbit and distance for each object. Combining the distance, brightness and an estimated reflectivity allowed them to calculate the approximate size. The newfound TNOs range in size from an estimated 25 to 60 miles (40-100 km) across.
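The article does not give the team's formulas. A common way to turn distance, brightness and reflectivity into a size uses two relations. The absolute magnitude is H ≈ m − 5·log10(r·Δ), ignoring the small phase-angle term for distant objects. The diameter is then D = 1329 km / √p × 10^(−H/5), where p is the geometric albedo. The magnitude scale also turns "100 million times fainter" into 20 magnitudes. This sketch uses those standard relations; the apparent magnitude, distances and albedo of 0.1 are hypothetical.

```python
import math


def magnitude_difference(flux_ratio):
    """A flux ratio F1/F2 corresponds to 2.5 * log10(F1/F2) magnitudes."""
    return 2.5 * math.log10(flux_ratio)


def absolute_magnitude(apparent_mag, sun_dist_au, earth_dist_au):
    """Approximate H, ignoring the phase-angle correction (small for TNOs)."""
    return apparent_mag - 5.0 * math.log10(sun_dist_au * earth_dist_au)


def diameter_km(abs_mag, albedo):
    """Standard small-body relation D = 1329 km / sqrt(p) * 10**(-H/5)."""
    return 1329.0 / math.sqrt(albedo) * 10 ** (-abs_mag / 5.0)


print(magnitude_difference(1e8))          # 100 million times fainter -> 20 magnitudes
h = absolute_magnitude(24.0, 40.0, 39.0)  # hypothetical object near 40 AU
print(round(h, 2), round(diameter_km(h, albedo=0.1)))
```

The albedo dominates the uncertainty: D scales as 1/√p, so halving the assumed reflectivity raises the estimated size by about 40 percent.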

Unlike planets, which tend to orbit very near the ecliptic, some TNOs have orbits quite tilted from that line. The team examined the size distribution of objects with both types of orbits to gain clues about how the population has evolved over the past 4.5 billion years.

Most smaller TNOs are thought to be the shattered remains of bigger ones. Over billions of years, these objects smack together, grinding each other down. The team found that the size distribution of TNOs with flat versus tilted orbits is about the same as objects get fainter and smaller. Therefore, both populations have similar collisional histories, the researchers said.

The study examined only one-third of a square degree of the sky, so there’s much more area to survey. Hundreds of additional TNOs may lurk in the Hubble archives at higher ecliptic latitudes, said Fuentes and his colleagues, who plan to continue their search. “We have proven our ability to detect and characterize TNOs even with data intended for completely different purposes,” Fuentes said.

World Science

Tuesday, September 14, 2010

Thermosetting Polymer

A thermosetting plastic, also known as a thermoset, is a polymer material that cures irreversibly. The cure may be induced by heat (generally above 200 °C (392 °F)), by a chemical reaction (two-part epoxy, for example), or by irradiation such as electron beam processing.

Thermoset materials are usually liquid or malleable prior to curing and are designed to be molded into their final form or used as adhesives. Others are solids, such as the molding compound used in semiconductors and integrated circuits (ICs).

According to the IUPAC recommendation, a thermosetting polymer is a prepolymer in a soft solid or viscous state that changes irreversibly into an infusible, insoluble polymer network by curing. Curing can be induced by the action of heat or suitable radiation, or both. A cured thermosetting polymer is called a thermoset.

Process

The curing process transforms the resin into a plastic or rubber by cross-linking. Energy and/or catalysts are added that cause the molecular chains to react at chemically active sites (unsaturated or epoxy sites, for example), linking them into a rigid, 3-D structure. Cross-linking produces a molecule of larger molecular weight, resulting in a material with a higher melting point. Once the molecular weight has increased to the point where the melting point is higher than the surrounding ambient temperature, the material becomes a solid.

Uncontrolled reheating of the cured material causes it to reach its decomposition temperature before its melting point. Therefore, a thermoset material cannot be melted and re-shaped after it is cured. This implies that thermosets cannot be recycled, except as filler material.

Wiki

Failure Analysis

Failure analysis is the process of collecting and analyzing data to determine the cause of a failure. It is an important discipline in many branches of manufacturing industry, such as the electronics industry, where it is a vital tool in the development of new products and the improvement of existing ones. It relies on collecting failed components for subsequent examination of the cause or causes of failure, using a wide array of methods, especially microscopy and spectroscopy. Nondestructive testing (NDT) methods are valuable because they leave the failed products unaffected, so inspection always starts with these methods.

Forensic investigation

Forensic inquiry into the failed process or product is the starting point of failure analysis. Such inquiry is conducted using scientific analytical methods such as electrical and mechanical measurements, or by analysing failure data such as product reject reports or examples of previous failures of the same kind. The methods of forensic engineering are especially valuable in tracing product defects and flaws. These may include, for example, fatigue cracks and brittle cracks produced by stress corrosion cracking or environmental stress cracking. Witness statements can be valuable for reconstructing the likely sequence of events and hence the chain of cause and effect. Human factors can also be assessed once the cause of the failure is determined. Several methods help prevent product failures from occurring in the first place, including failure mode and effects analysis (FMEA) and fault tree analysis (FTA). These methods can be used during prototyping to analyse failures before a product is marketed.
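Fault tree analysis, mentioned above, combines the probabilities of basic events through logic gates up to a "top event". This is a minimal sketch that assumes all basic events are independent. Under that assumption an AND gate multiplies probabilities, and an OR gate gives 1 − ∏(1 − p). The tree, event names and probabilities are hypothetical. Real FTA tools also handle common-cause failures and minimal cut sets, which this sketch omits.

```python
from dataclasses import dataclass, field
from math import prod
from typing import List, Union


@dataclass
class BasicEvent:
    name: str
    p: float  # probability of this event over the period of interest


@dataclass
class Gate:
    kind: str  # "AND" or "OR"
    inputs: List["Node"] = field(default_factory=list)


Node = Union[BasicEvent, Gate]


def probability(node: Node) -> float:
    """Top-event probability, assuming all basic events are independent."""
    if isinstance(node, BasicEvent):
        return node.p
    ps = [probability(child) for child in node.inputs]
    if node.kind == "AND":
        return prod(ps)                         # every input must occur
    if node.kind == "OR":
        return 1.0 - prod(1.0 - p for p in ps)  # at least one input occurs
    raise ValueError(f"unknown gate kind: {node.kind!r}")


# Hypothetical tree: the unit fails if its power supply fails OR both fans fail.
system_failure = Gate("OR", [
    BasicEvent("power supply fault", 0.01),
    Gate("AND", [BasicEvent("primary fan", 0.05), BasicEvent("backup fan", 0.05)]),
])
print(f"{probability(system_failure):.6f}")  # 0.012475
```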

Failure theories can only be constructed on such data, but when corrective action is needed quickly, the precautionary principle demands that measures be put in place. In aircraft accidents, for example, all planes of the type involved can be grounded immediately, pending the outcome of the inquiry.

Another aspect of failure analysis is No Fault Found (NFF). The term describes a situation in which the evaluating technician cannot duplicate an originally reported failure mode, so the potential defect cannot be fixed.

NFF can be attributed to oxidation, defective connections of electrical components, temporary shorts or opens in the circuits, software bugs, or temporary environmental factors, but also to operator error. A large number of devices reported as NFF during the first troubleshooting session often return to the failure analysis lab with the same NFF symptoms or a permanent failure mode.

The term failure analysis also applies to other fields such as business management and military strategy.

Methods of Analysis

The failure analysis of many different products involves the use of the following tools and techniques:

Microscopes

Optical microscope

Liquid crystal

Scanning acoustic microscope (SAM)

Scanning Acoustic Tomography (SCAT)

Atomic Force Microscope (AFM)

Stereomicroscope

Photo emission microscope (PEM)

X-ray microscope

Infra-red microscope

Scanning SQUID microscope

Sample Preparation

Jet-etcher

Plasma etcher

Back Side Thinning Tools

Mechanical Back Side Thinning

Laser Chemical Back Side Etching

Spectroscopic Analysis

Transmission line pulse spectroscopy (TLPS)

Auger electron spectroscopy

Deep Level Transient Spectroscopy (DLTS)

Wiki

Polymorphism

Polymorphism in materials science is the ability of a solid material to exist in more than one form or crystal structure. Polymorphism can potentially be found in any crystalline material including polymers, minerals, and metals, and is related to allotropy, which refers to elemental solids. The complete morphology of a material is described by polymorphism and other variables such as crystal habit, amorphous fraction or crystallographic defects. Polymorphism is relevant to the fields of pharmaceuticals, agrochemicals, pigments, dyestuffs, foods, and explosives.

When polymorphism results from differences in crystal packing, it is called packing polymorphism. When it results from the existence of different conformers of the same molecule, it is called conformational polymorphism. In pseudopolymorphism, the different crystal types are the result of hydration or solvation. An example of an organic polymorph is glycine, which is able to form monoclinic and hexagonal crystals. Silica is known to form many polymorphs, the most important of which are α-quartz, β-quartz, tridymite, cristobalite, coesite, and stishovite.

An analogous phenomenon for amorphous materials is polyamorphism, when a substance can take on several different amorphous modifications.

Polymorphism is important in the development of pharmaceutical ingredients. Many drugs receive regulatory approval for only a single crystal form or polymorph. In a classic patent case, the pharmaceutical company GlaxoSmithKline defended its patent for polymorph type II of the active ingredient in Zantac against competitors, while the patent for polymorph type I had already expired. Polymorphism in drugs can also have direct medical implications. Medicine is often administered orally as a crystalline solid, and dissolution rates depend on the exact crystal form of a polymorph.
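One standard way to see why crystal form affects dissolution is the Noyes–Whitney model, which the text itself does not mention: dm/dt = D·A·(Cs − C)/h. Here D is the diffusivity, A the exposed surface area, Cs the saturation solubility, C the bulk concentration and h the diffusion-layer thickness. Polymorphs of the same drug can differ in Cs. If everything else is held equal, the dissolution rate therefore scales with solubility. This is a sketch only. All parameter values are hypothetical, and real tablets also differ in particle size, wetting and form conversion during dissolution.

```python
def dissolution_rate(diffusivity, area, c_saturation, c_bulk, layer_thickness):
    """Noyes-Whitney rate dm/dt = D * A * (Cs - C) / h; SI units give kg/s."""
    return diffusivity * area * (c_saturation - c_bulk) / layer_thickness


# Hypothetical parameters; only the saturation solubility differs between forms.
common = dict(diffusivity=7e-10, area=1e-4, c_bulk=0.0, layer_thickness=3e-5)
rate_form_i = dissolution_rate(c_saturation=1.0, **common)   # kg/m^3, assumed
rate_form_ii = dissolution_rate(c_saturation=1.5, **common)  # kg/m^3, assumed
print(rate_form_ii / rate_form_i)  # ≈ 1.5 under sink conditions (c_bulk = 0)
```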

Cefdinir is a drug appearing in 11 patents from 5 pharmaceutical companies, in which a total of 5 different polymorphs are described. The original inventor, Fujisawa (now Astellas), with its US partner Abbott, extended the original patent covering a suspension with a new anhydrous formulation. Competitors in turn patented hydrates of the drug with varying water content, described with only basic techniques such as infrared spectroscopy and XRPD. One review criticised this practice because these techniques at most suggest a different crystal structure but are unable to specify one. These techniques also tend to overlook chemical impurities or even co-components. Abbott researchers learned this the hard way when, in one patent application, they failed to recognize that their new cefdinir crystal form was in fact a pyridinium salt. The review also questioned whether the polymorphs offered any advantages over the existing drug, something a new patent clearly demands.

The elusive second polymorph of acetylsalicylic acid (aspirin) was first discovered by Vishweshwar et al., and fine structural details were given by Bond et al. The new crystal type was found after an attempted co-crystallization of aspirin and levetiracetam from hot acetonitrile. Form II is stable only at 100 K and reverts to form I at ambient temperature. In the (unambiguous) form I, two salicylic molecules form centrosymmetric dimers through the acetyl groups, with (acidic) methyl-proton-to-carbonyl hydrogen bonds. In the newly claimed form II, each salicylic molecule forms the same hydrogen bonds, but with two neighbouring molecules instead of one. With respect to the hydrogen bonds formed by the carboxylic acid groups, both polymorphs form identical dimer structures.

Paracetamol powder has poor compression properties, which makes it difficult to form into tablets; a new, more compressible polymorph of paracetamol has been discovered.

Because polymorphs differ in solubility, one polymorph may be more therapeutically active than another polymorph of the same drug.

Cortisone acetate exists in at least five different polymorphs, four of which are unstable in water and change to a stable form.

Carbamazepine (used to treat epilepsy and trigeminal neuralgia): the beta polymorph crystallizes from solvents of high dielectric constant, such as aliphatic alcohols, whereas the alpha polymorph crystallizes from solvents of low dielectric constant, such as carbon tetrachloride.

Estrogen and chloramphenicol also show polymorphism.

Wiki

Tuesday, August 31, 2010

Engineering Geology

Engineering geology is the application of the geologic sciences to engineering practice for the purpose of assuring that the geologic factors affecting the location, design, construction, operation and maintenance of engineering works are recognized and adequately provided for. Engineering geologists investigate and provide geologic and geotechnical recommendations, analysis, and design associated with human development. The realm of the engineering geologist is essentially earth-structure interaction: the investigation of how the earth or earth processes affect human-made structures and human activities.

Engineering geologic studies may be performed during the planning, environmental impact analysis, civil or structural engineering design, value engineering and construction phases of public and private works projects, and during the post-construction and forensic phases of projects. Work completed by engineering geologists includes geologic hazard, geotechnical, material property, landslide and slope stability, erosion, flooding, dewatering, and seismic investigations. Engineering geologic studies are performed by a geologist or engineering geologist with the education, training and experience needed for three things: to recognize and interpret natural processes, to understand how these processes affect man-made structures (and vice versa), and to apply methods that mitigate hazards resulting from adverse natural or man-made conditions. The principal objective of the engineering geologist is the protection of life and property against damage caused by geologic conditions.

Engineering geologic practice is also closely related to the practice of geological engineering, geotechnical engineering, soils engineering, environmental geology and economic geology. If there is a difference in the content of the disciplines described, it mainly lies in the training or experience of the practitioner.

Wiki

Monday, August 30, 2010

Sedimentary Rock

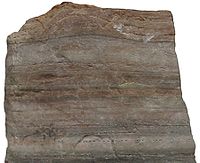

Sedimentary rock is a type of rock that is formed by sedimentation of material at the Earth's surface and within bodies of water. Sedimentation is the collective name for processes that cause mineral and/or organic particles (detritus) to settle and accumulate or minerals to precipitate from a solution. Particles that form a sedimentary rock by accumulating are called sediment. Before being deposited, sediment was formed by weathering and erosion in a source area. It was then transported to the place of deposition by water, wind, mass movement or glaciers, which are called agents of denudation.

The sedimentary rock cover of the continents of the Earth's crust is extensive, but the total contribution of sedimentary rocks is estimated to be only 5% of the total volume of the crust. Sedimentary rocks are only a thin veneer over a crust consisting mainly of igneous and metamorphic rocks.

Sedimentary rocks are deposited in layers as strata, forming a structure called bedding. The study of sedimentary rocks and rock strata provides information about the subsurface that is useful for civil engineering, for example in the construction of roads, houses, tunnels, canals or other constructions. Sedimentary rocks are also important sources of natural resources like coal, fossil fuels, drinking water or ores.

The study of the sequence of sedimentary rock strata is the main source for scientific knowledge about the Earth's history, including palaeogeography, paleoclimatology and the history of life.

The scientific discipline that studies the properties and origin of sedimentary rocks is called sedimentology. Sedimentology is both part of geology and physical geography and overlaps partly with other disciplines in the Earth sciences, such as pedology, geomorphology, geochemistry or structural geology.

Classification

Clastic

Clastic sedimentary rocks are composed of discrete fragments or clasts of materials derived from other minerals. They are composed largely of quartz with other common minerals including feldspar, amphiboles, clay minerals, and sometimes more exotic igneous and metamorphic minerals.

Clastic sedimentary rocks, such as shale or sandstone, are formed from rocks that have been broken down into fragments by weathering, which have then been transported and deposited elsewhere.

Clastic sedimentary rocks may be regarded as falling along a scale of grain size. Shale is the finest, with particles less than 0.002 mm. Siltstone is a little coarser, with particles between 0.002 and 0.063 mm. Sandstone is coarser still, with grains of 0.063 to 2 mm. Conglomerates and breccias are the coarsest, with grains of 2 to 256 mm. Breccia has angular particles, while conglomerate is characterized by rounded particles. Particles larger than 256 mm are termed blocks (angular) or boulders (rounded). Lutite, arenite and rudite are general terms for sedimentary rocks with clay/silt-, sand- or conglomerate/breccia-sized particles.
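The grain-size scale above can be written as a simple lookup. This sketch uses the class limits given in the text and takes the gravel/boulder boundary as 256 mm, the Wentworth-scale value. How exact boundary values are assigned is an assumption. Above 256 mm the names describe individual clasts rather than rock types. Real classification also weighs composition, cement and matrix, as the next paragraph explains.

```python
def classify_clastic(grain_mm: float, angular: bool = False) -> str:
    """Name a clastic rock (or clast) from its typical grain size in mm."""
    if grain_mm <= 0:
        raise ValueError("grain size must be positive")
    if grain_mm < 0.002:
        return "shale"
    if grain_mm < 0.063:
        return "siltstone"
    if grain_mm < 2.0:
        return "sandstone"
    if grain_mm <= 256.0:
        # gravel-sized grains: angularity separates breccia from conglomerate
        return "breccia" if angular else "conglomerate"
    # larger than 256 mm: individual clasts rather than a rock type
    return "block" if angular else "boulder"


print(classify_clastic(0.5), classify_clastic(10.0, angular=True))  # sandstone breccia
```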

The classification of clastic sedimentary rocks is complex because there are many variables involved. Particle size (both the average size and range of sizes of the particles), composition of the particles (in sandstones, this includes quartz arenites, arkoses, and lithic sandstones), the cement, and the matrix (the name given to the smaller particles present in the spaces between larger grains) must all be taken into consideration.

Shales, which consist mostly of clay minerals, are generally further classified on the basis of composition and bedding. Coarser clastic sedimentary rocks are classified according to their particle size and composition. Orthoquartzite is a very pure quartz sandstone; arkose is a sandstone with quartz and abundant feldspar; greywacke is a sandstone with quartz, clay, feldspar, and metamorphic rock fragments present, which was formed from the sediments carried by turbidity currents.

All rocks disintegrate when exposed to mechanical and chemical weathering at the Earth's surface.

Lower Antelope Canyon was carved out of the surrounding sandstone by both mechanical weathering and chemical weathering. Wind, sand, and water from flash flooding are the primary weathering agents.

Mechanical weathering is the breakdown of rock into particles without producing changes in the chemical composition of the minerals in the rock. Ice is the most important agent of mechanical weathering. Water percolates into cracks and fissures within the rock, freezes, and expands. The force exerted by the expansion is sufficient to widen cracks and break off pieces of rock. Heating and cooling of the rock, and the resulting expansion and contraction, also aid the process. Mechanical weathering contributes further to the breakdown of rock by increasing the surface area exposed to chemical agents.

Chemical weathering is the breakdown of rock by chemical reaction. In this process the minerals within the rock are changed into particles that can be easily carried away. Air and water are both involved in many complex chemical reactions. The minerals in igneous rocks may be unstable under normal atmospheric conditions, those formed at higher temperatures being more readily attacked than those formed at lower temperatures. Igneous rocks are commonly attacked by water, particularly acid or alkaline solutions, and all of the common igneous rock forming minerals (with the exception of quartz, which is very resistant) are changed in this way into clay minerals and chemicals in solution.

Rock particles in the form of clay, silt, sand, and gravel are transported by the agents of erosion (usually water, and less frequently, ice and wind) to new locations and redeposited in layers, generally at a lower elevation.

These agents reduce the size of the particles, sort them by size, and then deposit them in new locations. The sediments dropped by streams and rivers form alluvial fans, flood plains and deltas, and accumulate on the bottoms of lakes and on the sea floor. The wind may move large amounts of sand and other smaller particles. Glaciers transport and deposit great quantities of usually unsorted rock material as till.

These deposited particles eventually become compacted and cemented together, forming clastic sedimentary rocks. Such rocks contain inert minerals that resist mechanical and chemical breakdown, such as quartz, one of the most mechanically and chemically resistant minerals. Highly weathered sediments can contain several heavy, stable minerals, a property best illustrated by the ZTR (zircon-tourmaline-rutile) index.
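The ZTR index is commonly defined as the combined percentage of zircon, tourmaline and rutile among the transparent, non-micaceous heavy-mineral grains counted in a sample. Higher values indicate a more mineralogically mature, more thoroughly weathered sediment. This minimal sketch assumes the input counts already exclude opaque and micaceous grains. The point count itself is hypothetical.

```python
ZTR_MINERALS = ("zircon", "tourmaline", "rutile")


def ztr_index(grain_counts: dict[str, int]) -> float:
    """Percentage of zircon + tourmaline + rutile among the counted
    transparent, non-micaceous heavy-mineral grains."""
    total = sum(grain_counts.values())
    if total == 0:
        raise ValueError("no grains counted")
    ztr = sum(grain_counts.get(m, 0) for m in ZTR_MINERALS)
    return 100.0 * ztr / total


# Hypothetical point count from a heavy-mineral separate.
sample = {"zircon": 40, "tourmaline": 25, "rutile": 15, "garnet": 10, "epidote": 10}
print(ztr_index(sample))  # 80.0
```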

Organic

Organic sedimentary rocks contain materials generated by living organisms, and include carbonate minerals created by organisms, such as corals, mollusks, and foraminifera, which cover the ocean floor with layers of calcium carbonate, which can later form limestone. Other examples include stromatolites, the flint nodules found in chalk (which is itself a biochemical sedimentary rock, a form of limestone), and coal and oil shale (derived from the remains of tropical plants and subjected to heat).

Chemical

Chemical sedimentary rocks form when minerals in solution become supersaturated and precipitate. In marine environments, this is one way in which limestone forms. Another common environment in which chemical sedimentary rocks form is a body of water that is evaporating. Evaporation decreases the amount of water without decreasing the amount of dissolved material, so the dissolved material can become supersaturated and precipitate. Sedimentary rocks from this process can include the evaporite minerals halite (rock salt), sylvite, barite and gypsum.
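The evaporation argument above is a mass balance: the dissolved mass stays fixed while the volume shrinks, so the concentration rises as C = C0 · V0/V. A solute therefore first saturates when the remaining fraction of water falls to C0/Csat. This sketch treats solution volume as water volume and ignores density changes and interactions between dissolved ions. The concentrations are illustrative round numbers, not measured values for seawater.

```python
def concentration_after_evaporation(c0, remaining_fraction):
    """Dissolved mass is conserved, so C = C0 / (V / V0)."""
    if not 0 < remaining_fraction <= 1:
        raise ValueError("remaining_fraction must be in (0, 1]")
    return c0 / remaining_fraction


def fraction_at_saturation(c0, c_saturation):
    """Fraction of the original water left when the solute first saturates."""
    return min(1.0, c0 / c_saturation)


# Illustrative round numbers in g/L.
print(fraction_at_saturation(30.0, 300.0))         # 0.1, so about 90 % must evaporate
print(concentration_after_evaporation(30.0, 0.5))  # 60.0
```

Solutes with lower solubility reach saturation first, which is one reason evaporite minerals tend to precipitate in a sequence as the water body shrinks.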

Wiki

Metamorphic Rock

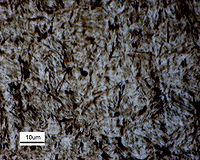

Metamorphic rock is the result of the transformation of an existing rock type, the protolith, in a process called metamorphism, which means "change in form". The protolith is subjected to heat and pressure (temperatures greater than 150 to 200 °C and pressures of 1500 bars[1]), causing profound physical and/or chemical change. The protolith may be sedimentary rock, igneous rock or another older metamorphic rock. Metamorphic rocks make up a large part of the Earth's crust and are classified by texture and by chemical and mineral assemblage (metamorphic facies). They may form simply by lying deep beneath the Earth's surface, subjected to high temperatures and the great pressure of the rock layers above them. They can form from tectonic processes such as continental collisions, which cause horizontal pressure, friction and distortion. They also form when rock is heated by the intrusion of hot molten rock, called magma, from the Earth's interior. The study of metamorphic rocks (now exposed at the Earth's surface following erosion and uplift) provides information about the temperatures and pressures that occur at great depths within the Earth's crust.
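The pressure exerted by overlying rock (lithostatic pressure) grows roughly linearly with depth, P = ρgh, so the 1500 bars quoted above can be converted into an approximate burial depth. This sketch assumes a uniform rock density of 2700 kg/m³, a typical value for continental crust used here only as an assumption, and ignores any additional tectonic stress.

```python
G = 9.81      # gravitational acceleration, m/s^2
BAR = 1.0e5   # pascals per bar


def depth_for_pressure(p_bar: float, density: float = 2700.0) -> float:
    """Depth (m) at which lithostatic pressure reaches p_bar, for uniform density (kg/m^3)."""
    return p_bar * BAR / (density * G)


def pressure_at_depth(depth_m: float, density: float = 2700.0) -> float:
    """Lithostatic pressure (bar) at depth_m beneath a uniform rock column."""
    return density * G * depth_m / BAR


print(f"{depth_for_pressure(1500) / 1000:.1f} km")  # roughly 5.7 km
```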

Metamorphic minerals

Metamorphic minerals are those that form only at the high temperatures and pressures associated with the process of metamorphism. These minerals, known as index minerals, include sillimanite, kyanite, staurolite, andalusite, and some garnet.

Other minerals, such as olivines, pyroxenes, amphiboles, micas, feldspars, and quartz, may be found in metamorphic rocks, but are not necessarily the result of the process of metamorphism. These minerals formed during the crystallization of igneous rocks. They are stable at high temperatures and pressures and may remain chemically unchanged during the metamorphic process. However, all minerals are stable only within certain limits, and the presence of some minerals in metamorphic rocks indicates the approximate temperatures and pressures at which they formed.

The change in the particle size of the rock during metamorphism is called recrystallization. For instance, the small calcite crystals in the sedimentary rock limestone change into larger crystals in the metamorphic rock marble, and in metamorphosed sandstone, recrystallization of the original quartz sand grains results in very compact quartzite, in which the often larger quartz crystals are interlocked. Both high temperatures and high pressures contribute to recrystallization. High temperatures allow the atoms and ions in solid crystals to migrate, reorganizing the crystals, while high pressures cause the crystals to dissolve at their points of contact.

Foliation

Folded foliation in a metamorphic rock from near Geirangerfjord, Norway.

The layering within metamorphic rocks is called foliation (derived from the Latin word folia, meaning "leaves"), and it occurs when a rock is being shortened along one axis during recrystallization. This causes the platy or elongated crystals of minerals, such as mica and chlorite, to become rotated such that their long axes are perpendicular to the orientation of shortening. This results in a banded, or foliated, rock, with the bands showing the colors of the minerals that formed them.

Textures are separated into foliated and non-foliated categories. Foliated rock is a product of differential stress that deforms the rock in one plane, sometimes creating a plane of cleavage. For example, slate is a foliated metamorphic rock, originating from shale. Non-foliated rock does not have planar patterns of strain.

Rocks that were subjected to uniform pressure from all sides, or those that lack minerals with distinctive growth habits, will not be foliated. Slate is an example of a very fine-grained, foliated metamorphic rock, while phyllite is medium, schist coarse, and gneiss very coarse-grained. Marble is generally not foliated, which allows its use as a material for sculpture and architecture.

Another important mechanism of metamorphism is that of chemical reactions that occur between minerals without them melting. In the process atoms are exchanged between the minerals, and thus new minerals are formed. Many complex high-temperature reactions may take place, and each mineral assemblage produced provides us with a clue as to the temperatures and pressures at the time of metamorphism.

Metasomatism is the drastic change in the bulk chemical composition of a rock that often occurs during the processes of metamorphism. It is due to the introduction of chemicals from other surrounding rocks. Water may transport these chemicals rapidly over great distances. Because of the role played by water, metamorphic rocks generally contain many elements absent from the original rock, and lack some that originally were present. Still, the introduction of new chemicals is not necessary for recrystallization to occur.

Wiki

Closed-Circuit Television

Closed-circuit television (CCTV) is the use of video cameras to transmit a signal to a specific place, on a limited set of monitors.

It differs from broadcast television in that the signal is not openly transmitted, though it may employ point-to-point (P2P), point-to-multipoint, or mesh wireless links. CCTV is often used for surveillance in areas that may need monitoring, such as banks, casinos, airports, military installations, and convenience stores. It is also an important tool for distance education.

In industrial plants, CCTV equipment may be used to observe parts of a process from a central control room, for example when the environment is not suitable for humans. CCTV systems may operate continuously or only as required to monitor a particular event. A more advanced form of CCTV, utilizing Digital Video Recorders (DVRs), provides recording for possibly many years, with a variety of quality and performance options and extra features (such as motion-detection and email alerts). More recently, decentralized IP-based CCTV cameras, some equipped with megapixel sensors, support recording directly to network-attached storage devices, or internal flash for completely stand-alone operation.

Surveillance of the public using CCTV is particularly common in the UK, where there are reportedly more cameras per person than in any other country in the world.

There and elsewhere, its increasing use has triggered a debate about security versus privacy.

The first closed-circuit television cameras used in public spaces were crude, conspicuous, low-definition black-and-white systems without the ability to zoom or pan. Modern CCTV systems use small, high-definition colour cameras that can not only focus to resolve minute detail but, when their controls are linked to a computer, also track objects semi-automatically. The technology that enables this is often referred to as Video Content Analysis (VCA), and it is being developed by a large number of technology companies around the world. Current systems can recognize whether a moving object is a walking person, a crawling person or a vehicle, and can also determine the object's colour. NEC claims to have a system that can estimate a person's age from a picture of him or her. Other technologies claim to be able to identify people by their biometrics.

CCTV monitoring station run by the West Yorkshire Police at the Elland Road football ground in Leeds.

The system identifies where an object is, how it is moving, and whether it is, for instance, a person or a car. Based on this information, system developers implement features such as blurring faces or "virtual walls" that block a camera's view where it is not allowed to film. It is also possible to give the system rules, for example "sound the alarm whenever a person is walking close to that fence" or, in a museum, "set off an alarm if a painting is taken down from the wall".
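A rule such as "sound the alarm whenever a person is walking close to that fence" can be expressed as a distance test between each tracked object and a fence line. The sketch below is hypothetical: it assumes an upstream VCA stage already supplies labelled detections with (x, y) positions in metres, and the `Detection` type and the 2 m threshold are illustrative inventions, not any vendor's API.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    label: str  # object class from the classifier, e.g. "person" or "vehicle"
    x: float    # position in metres (assumed ground-plane coordinates)
    y: float


def distance_to_segment(px, py, ax, ay, bx, by):
    """Shortest distance from point P to the line segment AB."""
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return math.hypot(px - ax, py - ay)
    # Project P onto AB and clamp the projection to the segment's ends
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def fence_alarms(detections, fence, threshold=2.0, label="person"):
    """Return detections of the given class within `threshold` metres of the fence."""
    (ax, ay), (bx, by) = fence
    return [
        d for d in detections
        if d.label == label
        and distance_to_segment(d.x, d.y, ax, ay, bx, by) <= threshold
    ]


fence = ((0.0, 0.0), (10.0, 0.0))
frame = [Detection("person", 5.0, 1.5), Detection("person", 5.0, 6.0),
         Detection("vehicle", 2.0, 0.5)]
print(fence_alarms(frame, fence))  # only the person 1.5 m from the fence
```

Restricting the rule by class keeps a vehicle on a nearby road from triggering a "person near fence" alarm.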

VCA can also be used for forensics after the footage has been recorded, by searching for certain actions within the recorded video. For example, if you know a criminal is driving a yellow car, you can set the system to search for yellow cars, and it will list every time a yellow car is visible in the picture. Searches can be made more precise, for example "a person moving around in a certain area for a suspicious amount of time", such as someone standing around an ATM without using it.
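Such searches usually query metadata that the VCA stage has already extracted rather than re-analysing the pixels. The sketch below assumes a hypothetical log of per-frame records (timestamp, track id, class, colour, position) and implements the two queries in the paragraph: every time a yellow car is visible, and anyone seen inside a zone around an ATM over a span longer than a chosen limit. The field names, zone and 120 s limit are illustrative assumptions; whether the person actually used the ATM is not something this sketch can tell.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """One hypothetical VCA metadata record for an object in one frame."""
    t: float  # seconds since the start of the recording
    track_id: int
    label: str  # object class, e.g. "car" or "person"
    colour: str
    x: float
    y: float


def find(records, label, colour):
    """Sorted timestamps at which an object of the given class and colour is visible."""
    return sorted({r.t for r in records if r.label == label and r.colour == colour})


def loiterers(records, zone, min_seconds):
    """Track ids of people seen inside `zone` over a span longer than min_seconds.

    zone is (xmin, ymin, xmax, ymax). Only the first and last sightings inside
    the zone are compared; exits and re-entries in between are not checked.
    """
    xmin, ymin, xmax, ymax = zone
    first, last = {}, {}
    for r in records:
        if r.label == "person" and xmin <= r.x <= xmax and ymin <= r.y <= ymax:
            first[r.track_id] = min(first.get(r.track_id, r.t), r.t)
            last[r.track_id] = max(last.get(r.track_id, r.t), r.t)
    return sorted(tid for tid in first if last[tid] - first[tid] > min_seconds)


log = [
    Record(10.0, 1, "car", "yellow", 3, 4),
    Record(11.0, 1, "car", "yellow", 5, 4),
    Record(12.0, 2, "car", "red", 1, 1),
    Record(0.0, 7, "person", "", 1, 1),
    Record(200.0, 7, "person", "", 1.5, 1.2),
    Record(50.0, 8, "person", "", 1, 1),
    Record(60.0, 8, "person", "", 1, 1),
]
print(find(log, "car", "yellow"))          # [10.0, 11.0]
print(loiterers(log, (0, 0, 2, 2), 120))   # [7]
```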

Surveillance camera outside a McDonald's highway drive-in.

Maintenance of CCTV systems is important in case forensic examination is necessary after a crime has been committed.

In crowds, the system is limited to finding anomalies, for instance a person moving in the opposite direction to the crowd, as might happen in an airport, where passengers are only supposed to walk in one direction out of a plane, or in a subway, where people are not supposed to exit through the entrances.[citation needed]
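One simple way to spot a person moving against the crowd is to compare each track's direction with the crowd's average direction. This sketch assumes hypothetical per-track velocity vectors (dx, dy) from a tracker; it averages their unit vectors to estimate the dominant flow and flags tracks whose cosine similarity with that flow falls below a threshold (-0.5 here, an arbitrary choice meaning more than 120° away from the main flow).

```python
import math


def unit(v):
    """Unit vector in the direction of v, or (0, 0) for a zero vector."""
    n = math.hypot(*v)
    return (v[0] / n, v[1] / n) if n > 0 else (0.0, 0.0)


def counter_flow(velocities, threshold=-0.5):
    """Return ids of tracks moving against the dominant direction of the crowd.

    velocities maps track id -> (dx, dy). Stationary tracks are ignored.
    """
    units = {tid: unit(v) for tid, v in velocities.items() if math.hypot(*v) > 0}
    if not units:
        return []
    mx = sum(u[0] for u in units.values()) / len(units)
    my = sum(u[1] for u in units.values()) / len(units)
    flow = unit((mx, my))
    if flow == (0.0, 0.0):
        return []  # movements cancel out: no dominant direction
    return sorted(
        tid for tid, u in units.items()
        if u[0] * flow[0] + u[1] * flow[1] < threshold
    )


crowd = {1: (1.0, 0.1), 2: (0.9, -0.1), 3: (1.2, 0.0), 4: (1.0, 0.05), 5: (-1.0, 0.0)}
print(counter_flow(crowd))  # [5]
```

Averaging unit vectors rather than raw velocities keeps one fast-moving track from dominating the estimate of the crowd's direction.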

VCA also has the ability to track people on a map by calculating their position from the images. It is then possible to link many cameras and track a person through an entire building or area. This can allow a person to be followed without having to analyze many hours of film. Currently the cameras have difficulty identifying individuals from video alone, but if connected to a key-card system, identities can be established and displayed as a tag over their heads on the video.
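For a flat floor, one common way to turn image positions into map positions is a planar homography: a 3×3 projective transform estimated from at least four reference points whose coordinates are known both in the image and on the map. The sketch below shows that technique, not necessarily what any particular VCA product does. It fixes h33 = 1, solves the resulting 8×8 linear system with plain Gaussian elimination, and assumes the four reference points are in general position (no three collinear) and that tracked points lie on the floor plane (e.g. a person's feet).

```python
def solve(a, b):
    """Solve a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: check the reference points")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_homography(image_pts, map_pts):
    """Estimate H (with h33 = 1) from four image -> map point correspondences."""
    a, b = [], []
    for (x, y), (u, v) in zip(image_pts, map_pts):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def to_map(hm, x, y):
    """Project an image point onto map coordinates using H."""
    w = hm[2][0] * x + hm[2][1] * y + hm[2][2]
    return ((hm[0][0] * x + hm[0][1] * y + hm[0][2]) / w,
            (hm[1][0] * x + hm[1][1] * y + hm[1][2]) / w)


image_pts = [(0, 0), (200, 0), (150, 100), (50, 100)]  # pixel corners of a floor area
map_pts = [(0, 0), (10, 0), (10, 10), (0, 10)]          # same corners on the map, metres
H = fit_homography(image_pts, map_pts)
print(to_map(H, 100, 80))  # map position of a pixel inside that area
```

With one homography per camera, positions from several cameras share the same map coordinates, which is what makes hand-over between cameras possible.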

Monitoring station of a small office building.

There is also a significant difference in where VCA processing takes place: the data is processed either within the cameras themselves (on the edge) or by a centralized server. Both approaches have their pros and cons.

The implementation of automatic number plate recognition produces a potential source of information on the location of persons or groups.

There is no technological limitation preventing a network of such cameras from tracking the movement of individuals. Reports have also been made of plate recognition misreading numbers, leading to entirely the wrong person being billed.[37] In the UK, car cloning is a crime in which perpetrators alter or deface their number plates, or replace them with stolen ones, in an attempt to avoid speeding and congestion charge fines and even to steal petrol from garage forecourts.
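Linking plate reads from several cameras into a per-vehicle trail is straightforward once each read carries a plate, a time and a camera location. The sketch below groups hypothetical reads by plate and, as one illustrative heuristic (an assumption, not a described feature of any deployed system), flags consecutive sightings of the same plate that would require an implausible speed, which could point to a misread or a cloned plate. Camera positions are assumed to be in kilometres on a flat map, times in hours, and the 200 km/h limit is arbitrary.

```python
import math
from collections import defaultdict


def trails(reads):
    """Group (plate, t_hours, x_km, y_km) reads into time-ordered trails per plate."""
    by_plate = defaultdict(list)
    for plate, t, x, y in reads:
        by_plate[plate].append((t, x, y))
    return {p: sorted(v) for p, v in by_plate.items()}


def implausible_hops(trail, max_kmh=200.0):
    """Consecutive sightings whose straight-line speed exceeds max_kmh."""
    flagged = []
    for (t1, x1, y1), (t2, x2, y2) in zip(trail, trail[1:]):
        dist = math.hypot(x2 - x1, y2 - y1)
        dt = t2 - t1
        if dist > 0 and (dt <= 0 or dist / dt > max_kmh):
            flagged.append(((t1, x1, y1), (t2, x2, y2)))
    return flagged


reads = [
    ("AB12CDE", 8.00, 0.0, 0.0),
    ("AB12CDE", 8.50, 30.0, 0.0),   # 30 km in 30 minutes: plausible
    ("AB12CDE", 8.60, 230.0, 0.0),  # 200 km in 6 minutes: implausible
    ("XY34FGH", 9.00, 5.0, 5.0),
]
all_trails = trails(reads)
print(implausible_hops(all_trails["AB12CDE"]))
```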

CCTV critics see the most disturbing extension to this technology as the recognition of faces from high-definition CCTV images.[citation needed] This could determine a person's identity without alerting him that his identity is being checked and logged. The systems can check many thousands of faces in a database in under a second.

The combination of CCTV and facial recognition has been tried as a form of mass surveillance, but has been ineffective because of the low discriminating power of facial recognition technology and the very high number of false positives generated. This type of system has been proposed to compare faces at airports and seaports with those of suspected terrorists or other undesirable entrants.
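The false-positive problem is largely one of base rates: when almost everyone scanned is innocent, even a small false-match rate produces far more false alarms than genuine matches. The worked example below uses hypothetical round numbers (one million travellers, ten people on a watch list, a 99% hit rate and a 1% false-match rate), not figures from any real trial.

```python
def screening_outcome(population, targets, hit_rate, false_match_rate):
    """Expected genuine and false alarms, and the share of alarms that are genuine."""
    true_alarms = targets * hit_rate
    false_alarms = (population - targets) * false_match_rate
    precision = true_alarms / (true_alarms + false_alarms)
    return true_alarms, false_alarms, precision


tp, fp, precision = screening_outcome(1_000_000, 10, 0.99, 0.01)
print(f"{tp:.1f} genuine matches, {fp:.0f} false alarms, precision {precision:.2%}")
```

Under these assumptions roughly one alarm in a thousand would be genuine, so operators would spend nearly all their time clearing innocent people.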

Eye-in-the-sky surveillance dome camera watching from a high steel pole.

Computerized monitoring of CCTV images is under development so that a human CCTV operator does not have to watch all the screens constantly, allowing one operator to observe many more CCTV cameras. These systems do not observe people directly. Instead, they track their behaviour by looking for particular types of body movement, or particular types of clothing or baggage.

The theory behind this is that in public spaces people behave in predictable ways. People who are not part of the 'crowd', for example car thieves, do not behave in the same way. The computer can identify their movements and alert the operator that they are acting out of the ordinary. In the latter part of 2006, news reports on UK television brought to light newly developed technology that uses microphones[clarification needed] in conjunction with CCTV.

If a person is detected shouting in an aggressive manner (e.g., provoking a fight), the camera can automatically zoom in, pinpoint the individual and alert a camera operator. This has led to concern that the technology could also be used to eavesdrop on and record private conversations from a considerable distance (e.g., 100 metres or about 330 feet).

The same type of system can track identified individuals as they move through the area covered by CCTV. Such applications were introduced in the early 2000s, mainly in the USA, France, Israel and Australia.[citation needed] With software tools, the system is able to develop three-dimensional models of an area and to track and monitor the movement of objects within it.

To many, the development of CCTV in public areas, linked to computer databases of people's pictures and identity, presents a serious breach of civil liberties. Critics fear the possibility that one would not be able to meet anonymously in a public place or drive and walk anonymously around a city.[citation needed] Demonstrations or assemblies in public places could be affected as the state would be able to collate lists of those leading them, taking part, or even just talking with protesters in the street.

Retention, storage and preservation

The long-term storage and archiving of CCTV recordings is an issue of concern in the implementation of a CCTV system. Re-usable media such as tape may be cycled through the recording process at regular intervals. There are statutory limits on retention of data.

Recordings are kept for several purposes. First, they serve the primary purpose for which they were created (e.g. to monitor a facility). Second, they need to be preserved for a reasonable amount of time to recover any evidence of other important activity they might document (e.g. a group of people passing a facility the night a crime was committed). Finally, the recordings may be evaluated for any historical, research or other long-term information of value they contain (e.g. samples kept to help understand trends for a business or community).

Recordings are now more commonly stored on hard disk drives than on video cassette recorders. The quality and length of digital recordings depend on the compression ratio, the number of images stored per second, the image size and how long images are retained before being overwritten. Different vendors of digital video recorders use different compression standards and compression ratios.
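A rough storage budget follows directly from those parameters: the average compressed frame size times the frame rate gives a data rate, and that rate times the retention period gives the disk space needed before footage is overwritten. The sketch below uses hypothetical values (a 1920×1080 colour frame at 3 bytes per pixel, an assumed 1:50 compression ratio, 10 frames per second, 31 days of retention); real codecs vary their output with scene content, so treat the result as an order-of-magnitude estimate.

```python
def storage_gb(width, height, bytes_per_pixel, compression_ratio, fps, days, cameras=1):
    """Approximate disk space (decimal GB) needed for continuous recording."""
    frame_bytes = width * height * bytes_per_pixel / compression_ratio
    seconds = days * 24 * 3600
    return frame_bytes * fps * seconds * cameras / 1e9


print(f"{storage_gb(1920, 1080, 3, 50, 10, 31):.0f} GB per camera")
```

Halving the frame rate or doubling the compression ratio halves the space required, which is the trade-off vendors expose as "quality and performance options".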

Wiki

Mount Sinabung

Mount Sinabung (Indonesian: Gunung Sinabung) is a Pleistocene-to-Holocene stratovolcano of andesite and dacite on the Karo plateau of Karo Regency, North Sumatra, Indonesia. Many lava flows are on its flanks, and its last known eruption before 2010 occurred in 1600. Solfataric activity (vents emitting steam and volcanic gases) was last seen at the summit in 1912, but no other documented events took place until the eruption in the early hours of 29 August 2010.

Geology

Most of Indonesian volcanism stems from the Sunda Arc, created by the subduction of the Indo-Australian Plate under the Eurasian Plate. This arc is bounded on the north-northwest by the Andaman Islands, a chain of basaltic volcanoes, and on the east by the Banda Arc, also created by subduction.[3]

Sinabung is a long andesitic-dacitic stratovolcano with a total of four volcanic craters, only one of which is active.

On 29 August 2010, the volcano experienced a minor eruption after several days of rumbling.[5] Ash spewed into the atmosphere up to 1.5 kilometres (0.93 mi) and lava was seen overflowing the crater.[5] The volcano had been inactive for centuries with the most recent eruption occurring in 1600.[5]

Mount Sinabung is classified as category “B”, which means it does not need to be monitored intensively. Other volcanoes, in category “A”, must be monitored frequently, the head of the National Volcanology Agency, named only as Surono, told Xinhua by phone from the province.

Wiki

Igneous Rock

Igneous rock (derived from the Latin word igneus, meaning "of fire", from ignis, meaning "fire") is one of the three main rock types, the others being sedimentary and metamorphic rock. Igneous rock is formed through the cooling and solidification of magma or lava. It may form with or without crystallization, either below the surface as intrusive (plutonic) rocks or on the surface as extrusive (volcanic) rocks. The magma can be derived from partial melts of pre-existing rocks in either a planet's mantle or crust. Typically, the melting is caused by one or more of three processes: an increase in temperature, a decrease in pressure, or a change in composition. Over 700 types of igneous rocks have been described, most of them formed beneath the surface of Earth's crust. These have diverse properties, depending on their composition and how they were formed.

Geological significance

The upper 16 kilometres (10 mi) of Earth's crust is composed of approximately 95% igneous rocks with only a thin, widespread covering of sedimentary and metamorphic rocks.[1]

Igneous rocks are geologically important because:

their minerals and global chemistry give information about the composition of the mantle, from which some igneous rocks are extracted, and the temperature and pressure conditions that allowed this extraction, and/or of other pre-existing rock that melted;

their absolute ages can be obtained from various forms of radiometric dating and thus can be compared to adjacent geological strata, allowing a time sequence of events;

their features are usually characteristic of a specific tectonic environment, allowing tectonic reconstructions (see plate tectonics);

in some special circumstances they host important mineral deposits (ores): for example, tungsten, tin, and uranium are commonly associated with granites and diorites, whereas ores of chromium and platinum are commonly associated with gabbros.

Intrusive igneous rocks

Close-up of granite (an intrusive igneous rock) exposed in Chennai, India.

Intrusive igneous rocks are formed from magma that cools and solidifies within the crust of a planet. Surrounded by pre-existing rock (called country rock), the magma cools slowly, and as a result these rocks are coarse grained. The mineral grains in such rocks can generally be identified with the naked eye. Intrusive rocks can also be classified according to the shape and size of the intrusive body and its relation to the other formations into which it intrudes. Typical intrusive formations are batholiths, stocks, laccoliths, sills and dikes.

The central cores of major mountain ranges consist of intrusive igneous rocks, usually granite. When exposed by erosion, these cores (called batholiths) may occupy huge areas of the Earth's surface.

Coarse-grained intrusive igneous rocks that form at depth within the crust are termed abyssal; intrusive igneous rocks that form near the surface are termed hypabyssal.

Extrusive igneous rocks

Basalt (an extrusive igneous rock in this case); light coloured tracks show the direction of lava flow.

Extrusive igneous rocks are formed at the crust's surface as a result of the partial melting of rocks within the mantle and crust. Extrusive igneous rocks cool and solidify more quickly than intrusive igneous rocks, and because they cool so quickly they are fine grained.

The melted rock, with or without suspended crystals and gas bubbles, is called magma. Magma rises because it is less dense than the rock from which it was created. Magma that reaches the surface and is extruded, whether under water or into air, is called lava. Eruptions of volcanoes into air are termed subaerial, whereas those occurring underneath the ocean are termed submarine. Black smokers and mid-ocean ridge basalt are examples of submarine volcanic activity.

The volume of extrusive rock erupted annually by volcanoes varies with plate tectonic setting. Extrusive rock is produced in the following proportions:[2]

divergent boundary: 73%

convergent boundary (subduction zone): 15%

hotspot: 12%.

Magma which erupts from a volcano behaves according to its viscosity, determined by temperature, composition, and crystal content. High-temperature magma, most of which is basaltic in composition, behaves in a manner similar to thick oil and, as it cools, treacle. Long, thin basalt flows with pahoehoe surfaces are common. Intermediate composition magma such as andesite tends to form cinder cones of intermingled ash, tuff and lava, and may have viscosity similar to thick, cold molasses or even rubber when erupted. Felsic magma such as rhyolite is usually erupted at low temperature and is up to 10,000 times as viscous as basalt. Volcanoes with rhyolitic magma commonly erupt explosively, and rhyolitic lava flows typically are of limited extent and have steep margins, because the magma is so viscous.

Felsic and intermediate magmas often erupt violently, with explosions driven by the release of dissolved gases, typically water but also carbon dioxide. Explosively erupted pyroclastic material is called tephra and includes tuff, agglomerate and ignimbrite. Fine volcanic ash is also erupted and forms ash tuff deposits, which can cover vast areas.

Because lava cools and crystallizes rapidly, it is fine grained. If the cooling has been so rapid as to prevent the formation of even small crystals after extrusion, the resulting rock may be mostly glass (such as the rock obsidian). If the cooling of the lava happened slowly, the rocks would be coarse-grained.

Because the minerals are mostly fine-grained, it is much more difficult to distinguish between the different types of extrusive igneous rocks than between different types of intrusive igneous rocks. Generally, the mineral constituents of fine-grained extrusive igneous rocks can only be determined by examination of thin sections of the rock under a microscope, so only an approximate classification can usually be made in the field.

Wiki

Friday, July 16, 2010

Injection Moulder Provides 1600kN Clamping Force

The Engel Victory 160 replaces the current Engel Victory and E-Victory 150 machines.

The Engel Victory 160 features a redesigned clamping cylinder, mould-fixing platen and C frame.

The use of the Flex-Links with force dividers allows for unbeatable clamping-unit quality.

They reduce the deflection of the moving mould-fixing platen to a minimum and ensure smoothly distributed force transmission to the mould across the whole mould-mounting surface.

All injection units are safeguarded by safety fences and safety gates.

Hydraulic injection units of size 750 are alternatively available as encapsulated types, without additional safety guarding.

The selection of electrical injection units has now been extended to include the 940 injection unit.

The Engel Victory 160's new ecodrive hydraulic drive system is now also available.

The new system has a fixed displacement pump and servomotor instead of the standard hydraulics and asynchronous motor used previously.

This means the machine's speed is directly linked to the drive speed.

The new servohydraulic ecodrive keeps the speed down.

In other words, the drive is only active during movements, with energy consumption close to zero when the machine is idle.

This makes it possible to reduce energy consumption by 70 per cent.
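The saving comes from cutting idle consumption: a fixed-speed pump driven by an asynchronous motor keeps running between movements, whereas the servo drive draws almost nothing while the machine is idle. The toy model below compares energy per cycle for the two cases. Every power figure and the duty cycle are hypothetical and are not Engel data; the real saving depends on the actual moulding cycle.

```python
def energy_per_cycle_kj(p_move_kw, t_move_s, p_idle_kw, t_idle_s):
    """Energy per cycle (kJ): power (kW) times time (s) for moving and idle phases."""
    return p_move_kw * t_move_s + p_idle_kw * t_idle_s


# Hypothetical cycle: 6 s of movements, 14 s idle (e.g. cooling time)
conventional = energy_per_cycle_kj(20.0, 6.0, 8.0, 14.0)  # pump keeps running when idle
servo = energy_per_cycle_kj(20.0, 6.0, 0.2, 14.0)         # drive nearly stopped when idle
saving = 1 - servo / conventional
print(f"{conventional:.1f} kJ vs {servo:.1f} kJ per cycle, saving {saving:.0%}")
```

The longer the idle share of the cycle, the larger the saving, which is why the figure quoted by a manufacturer is a best case rather than a constant.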

Tooling University

Telsonic Outlines Key to Ultrasonic Welding

Telsonic's Martin Frost discusses how thinking ahead can assist designers and integrators of ultrasonic welding to avoid pitfalls and make the most of the flexible and robust joining technology.

Reducing the time required to get a product to market is often a key element of today's manufacturing strategies.

Achieving these objectives involves taking a 'right first time' approach to minimise the lengthy development stages that can often be associated with a new product.

The increasing use of SLA and 3D printed models is a tremendous aid to visualising the shape, size, sections and features of the finished component, giving designers and production engineers a real insight into the production and performance criteria associated with the part.

Reducing the design and development time, however, means that the product designers have to make carefully considered and informed design inputs more quickly.

The most effective way of ensuring that the ultrasonic welding process will be consistent and predictable is to design for assembly wherever possible.

At the design stage, attention should focus on the polymers to be used and on preparing the part geometry and tolerances.

This will simplify and enhance the reliability of the subsequent assembly and welding process.

Considering the potential requirement for specific weld features on the part is essential at the outset of the design process, as this in turn will have an impact on the component and the mould tool design.

These are important decisions that will ultimately influence the production process and part functionality, and they should be based on sound advice sought through collaboration with the manufacturer of the ultrasonic technology.

Ultrasonic welding depends upon the response of the materials being joined.

Materials such as polystyrene, ABS and polycarbonate all respond well to ultrasonic energy.

Other materials, however, including polyethylene and tougher grades of nylon, are more difficult to weld with ultrasonics.

Reinforced materials, such as those with fillers, can have a positive or negative impact on the ultrasonic welding process, depending on the type and quantity of filler.

The best results will always be obtained when the components to be welded are produced from the same material.

Dissimilar materials can be joined using ultrasonics providing they are in the same chemically compatible family and have similar melting points.

It is also possible to weld completely dissimilar materials using a joint design that will allow one material to be reformed and encapsulate the other mechanically, thus securing it in place.

Next to the selection of materials, part design and, in particular, joint design hold the key to creating a robust and repeatable weld.

Ultrasonic weld specialists and the internet offer plenty of valuable ground-rule information on joint designs - even Telsonic has its own design recommendation manual.

This information should be viewed as a guide only, as complex or delicate components require a sensitive approach to welding.

As an example, in these instances it is important to establish that the parts to be welded are actually capable of holding up to the forces applied by the process, however small.

Other dilemmas faced by the designer include ensuring that a joint can be achieved reliably - at speed and with a sufficiently wide process window.

Too narrow a process window will result in repeated machine setting and possibly higher reject rates.
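Why a narrow window raises reject rates can be seen by treating a key weld result (collapse distance, say) as normally distributed around its set point: the expected reject rate is the probability of falling outside the acceptable window. The sketch below uses the normal distribution via `math.erf`; the normality assumption and the numbers (a 0.50 mm target with 0.05 mm standard deviation) are illustrative only.

```python
import math


def normal_cdf(x, mean, sd):
    """Cumulative probability of a normal distribution at x."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


def reject_rate(lower, upper, mean, sd):
    """Fraction of welds expected to fall outside [lower, upper]."""
    return 1.0 - (normal_cdf(upper, mean, sd) - normal_cdf(lower, mean, sd))


# Same process spread, two window widths around a 0.50 mm target
for half_width in (0.15, 0.075):
    r = reject_rate(0.50 - half_width, 0.50 + half_width, 0.50, 0.05)
    print(f"window ±{half_width} mm -> {r:.2%} rejects")
```

Halving the window from ±3 to ±1.5 standard deviations takes the expected reject rate from well under 1% to over 10%, so operators end up repeatedly adjusting settings to stay inside it.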

The proposed production method - manual or automated - can also have an influence on both the product design and the welding process.

A further consideration may be the presence of other components, especially delicate parts or electronics that may be part of the assembly.

At this level it is important to have more than just a basic appreciation of the ultrasonic welding process.

Seeking advice from the supplier will ensure that the solution is based upon extensive experience of how best to apply the technology to the task in hand.

Examples where this type of collaboration has been successful include the creation of joint designs and part preparation where the ultrasonic energy is focused into the joint efficiently and the weld completed swiftly, without dissipation to other areas of the component or even a change in weld frequency.

These principles not only result in a successful weld but eliminate the risk of damage to any internal components.

Where there is to be more than one weld on a given part, both the component and joint design should be reviewed to ensure that energy from multiple weld sequences does not have an adverse effect on any of the previous weld points.

Good joint design in delicate components should be mindful of the amount of energy required to achieve the weld.

A typical example of the approach would be the use of an 'energy director' joint, as opposed to a 'shear joint'.

This design, when combined with application experience, still achieves both strength and a hermetic seal, but with a reduction in the energy required to make the weld of up to 40 per cent.

The use of location features, often surrounding the weld joint, makes pre-welding assembly more robust by ensuring that the individual parts are positioned repeatably every time, with the added benefit of assisting the welding process.

The importance of dimensional tolerances and component stability must not be overlooked if the welding process is to remain consistent during production.

Parts can vary because of inconsistent moulding conditions, or because of poor handling and storage after moulding while they are still cooling.

Any resulting inconsistencies in the shape and size of the individual parts, whether due to these factors or to inappropriate component tolerances, will be reflected in the results of the welding process.

With the design complete and component parts moulded, the physical parts should then be reviewed carefully at QC level to scrutinise the ultrasonic weld preparation features for size and accuracy.

The joint itself should be viewed and respected as a precise collapse of polymer melt, sized and positioned to provide a predictable and process controllable way to achieve fuse strength and not just a token sacrificial bead of plastic.

Having defined materials, joint design, tolerances and moulded a part fit for sustainable and quality production, it is essential to ensure that the welding process is not compromised by the use of inappropriate or underpowered equipment.

Attempting to use equipment that cannot generate the required ultrasonic power, or that lacks the control functionality the task requires, will undoubtedly result in continual adjustments to pressure and amplitude and, ultimately, guesswork in trying to make the application 'fit' the processing capabilities of the machine.

This is especially important for high-volume precision components and those used in medical devices or other safety-critical applications, where it is essential to produce parts to a consistent specification and quality.

In these instances it is essential to invest in a supplier specialist with design capability, laboratory development facilities, a broad range of machines and modules, together with the expertise to develop a robust production solution in partnership with the design house, integrator and manufacturer.

Tooling University

Purging Compound Reduces Extrusion Downtime

Package film extrusion plants that make frequent material changeovers have been successful in using a chemical purging compound to improve quality and reduce downtime.

Package film extrusion plants have been successful in using SuperNova chemical purging compound from Novachem to improve quality and reduce downtime.

One operator found that frequent material changeovers, particularly when using Eval or Surlyn, can yield gels and specks in production output; even after running scrap for two or three hours, gels and specks were still apparent.

This led to frequent unscheduled die teardowns - at least once a month - and hours of lost production time.

The Application Specialists at Novachem were able to create custom purging processes to help remove leftover production material that can degrade the machinery during transitions and startup.

After analyzing the plant's needs, a site-specific regimen of SuperNova chemical purging compound was recommended.

This usually involves use of the compound before every material transition, and following each teardown and cleaning.

With the tailored SuperNova regimen recommended by Novachem's application specialists in place, changeovers produced no more gels and little or no specking after shutdowns.

Plants using custom purging processes developed by Novachem's application experts have typically seen dramatic improvements in productivity and worker efficiency.

Some plants have saved up to 12 hours a month on changeovers and have avoided up to three shifts a month of teardowns.

As a bonus, there is little or no product waste due to gels or specks.

Tooling University

Double-Strand Core Extrusion Reduces Costs

In profile manufacture, coextrusion can cut costs substantially, said KraussMaffei.

For example, producing a profile with a regrind core covered with virgin material in all visible areas sharply reduces material costs.
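To make the cost argument concrete, here is a minimal Python sketch of the mass-weighted material cost of a core profile. It assumes the roughly 60% virgin / 40% regrind split quoted for Schuco's main window profiles; the per-kilogram prices are hypothetical placeholders, not figures from KraussMaffei or Schuco, and the sketch ignores the cost of preparing the regrind.

```python
def blended_cost_per_kg(virgin_fraction: float, virgin_price: float, regrind_price: float) -> float:
    """Mass-weighted material cost of a profile with a regrind core and a virgin skin."""
    if not 0.0 <= virgin_fraction <= 1.0:
        raise ValueError("virgin_fraction must be between 0 and 1")
    regrind_fraction = 1.0 - virgin_fraction
    return virgin_fraction * virgin_price + regrind_fraction * regrind_price


# Hypothetical prices in arbitrary currency units per kg (not from the article).
VIRGIN_PRICE = 1.00
REGRIND_PRICE = 0.40

all_virgin = blended_cost_per_kg(1.0, VIRGIN_PRICE, REGRIND_PRICE)
core_profile = blended_cost_per_kg(0.6, VIRGIN_PRICE, REGRIND_PRICE)  # ~60/40 split
saving = 1.0 - core_profile / all_virgin
print(f"all-virgin: {all_virgin:.2f}, core profile: {core_profile:.2f}, saving: {saving:.0%}")
```

With these placeholder prices the saving is simply the regrind fraction times the relative price gap, so the real figure scales directly with how cheap the regrind actually is.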

Schuco International has recently invested in a profile system using core extrusion technology.

Schuco International is a global player, developing and marketing complete systems using plastics, aluminium and steel.

One current project combines double-strand extrusion with core technology.

Double-strand extrusion produces two profiles simultaneously, which reduces both the capital investment and the floor space required for the extrusion line.

Schuco embarked on a cooperative project with Greiner Extrusion and KraussMaffei Berstorff to develop a double-strand extrusion system for producing its five-chamber main window profiles.

The big challenges were to design the die, to split and manage the melt streams, and to develop a cost-effective extruder concept.

The combined know-how of the three partners made it possible to meet these challenges in a remarkably short time.

* Pressure-optimized channel system - the material ratios in Schuco's main window profiles are around 60% virgin PVC and 40% regrind.

The new system uses two extruders, both of which supply both strands.

The melt streams are split via a pressure-optimized channel system so that they reach the dies in the required pattern.

The two extruders need to be positioned very close together in order to feed the channel system effectively.

The concept uses two separate KMD 90-36/P profile extruders from KraussMaffei Berstorff, each on its own base frame.

The main control cabinets are positioned at a distance from the extrusion line.

Two extra compact control units, positioned close to the extruder output zone, house the die control circuits and the operator panels.

Both operator panels (one for each extruder) are on the operator side.

Each extruder can be operated separately, or the two extruders can be operated in synchrony.

This gives Schuco maximum flexibility to respond to future requirements.

* About KraussMaffei - KraussMaffei is the only supplier worldwide of the three key machine technologies for the plastics and rubber compounding and processing industries.

The KraussMaffei brand stands for comprehensive solutions for injection and reaction moulding, while the KraussMaffei Berstorff brand covers the whole spectrum of extrusion systems, including complete extrusion lines.

KraussMaffei has a unique wealth of know-how across the whole range of processing methods.

As a technology partner, it links this know-how with innovative engineering to deliver application-specific and integrated solutions.

KraussMaffei operates a network of 70 subsidiaries and sales agencies close to customers worldwide.

Tooling University

Saturday, July 10, 2010

Brain Structure

Scientists have found that the sizes of different parts of people’s brains correspond to their personalities. For example, conscientious people tend to have a bigger lateral prefrontal cortex, a brain region involved in planning and controlling actions.

Psychologists commonly break down all personality traits into five factors: conscientiousness, extraversion, neuroticism, agreeableness, and openness/intellect. Researcher Colin DeYoung of the University of Minnesota and colleagues wanted to know whether these factors correlated with the size of structures in the brain.

The scientists gave 116 volunteers a questionnaire to describe their personality, then gave them a brain imaging test that measured the relative size of different parts of the brain. Several links were found between the size of certain brain regions and personality. The research appears in the journal Psychological Science.

For example, “everybody, I think, has a common sense of what extroversion is – someone who is talkative, outgoing, brash,” said DeYoung. “They get more pleasure out of things like social interaction, amusement parks, or really just about anything, and they’re also more motivated to seek reward, which is part of why they’re more assertive.” That quest for reward is thought to be a leading factor in extroversion.

Earlier studies had found parts of the brain that are active in considering rewards. So DeYoung and his colleagues reasoned that those regions should be bigger in extroverts. Indeed, they found that one of those regions, the medial orbitofrontal cortex – just above and behind the eyes – was significantly larger in very extroverted study subjects.

The study found similar associations for conscientiousness, which is associated with planning; neuroticism, a tendency to experience negative emotions that is associated with sensitivity to threat and punishment; and agreeableness, which relates to parts of the brain that allow us to understand each other’s emotions, intentions, and mental states. Only openness/intellect didn’t associate clearly with any of the predicted brain structures, the researchers found.

“This starts to indicate that we can actually find the biological systems that are responsible for these patterns of complex behavior and experience that make people individuals,” said DeYoung. He points out, though, that this doesn’t mean your personality is fixed from birth. The brain grows and changes, experiences shape it as it develops, and those changes in the brain can change personality.

World Science

Thursday, July 1, 2010

Transformations of Energy

One form of energy can often be readily transformed into another with the help of a device. For instance, a battery converts chemical energy to electric energy, and a dam converts gravitational potential energy to kinetic energy of moving water (and of the blades of a turbine) and ultimately, through an electric generator, to electric energy. Similarly, in a chemical explosion, chemical potential energy is transformed to kinetic energy and thermal energy in a very short time. Yet another example is a pendulum. At the highest points of its swing the kinetic energy is zero and the gravitational potential energy is at its maximum. At its lowest point the kinetic energy is at its maximum and equals the decrease in potential energy. If one (unrealistically) assumes that there is no friction, the conversion of energy between these forms is perfect, and the pendulum will continue swinging forever.
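The frictionless pendulum can be checked numerically. The Python sketch below integrates the ideal pendulum equation with a velocity Verlet step and tracks kinetic and potential energy; the length, mass, release angle and time step are assumed values chosen for illustration. The sum stays essentially constant, and the kinetic energy at the bottom of the swing matches the potential energy at release.

```python
import math

G = 9.81      # gravitational acceleration, m/s^2
LENGTH = 1.0  # pendulum length, m (assumed)
MASS = 1.0    # bob mass, kg (assumed)


def energies(theta, omega):
    """Kinetic and gravitational potential energy; potential is zero at the lowest point."""
    kinetic = 0.5 * MASS * (LENGTH * omega) ** 2
    potential = MASS * G * LENGTH * (1.0 - math.cos(theta))
    return kinetic, potential


def simulate(theta0=0.5, dt=1e-4, t_end=4.0):
    """Release the bob at rest from angle theta0 (radians) and integrate without friction."""
    theta, omega = theta0, 0.0
    accel = -(G / LENGTH) * math.sin(theta)
    start_ke, start_pe = energies(theta, omega)
    total0 = start_ke + start_pe  # all potential energy at release
    max_ke = 0.0
    max_drift = 0.0
    for _ in range(int(t_end / dt)):
        # Velocity Verlet: half-step velocity, full-step angle, half-step velocity.
        omega_half = omega + 0.5 * dt * accel
        theta += dt * omega_half
        accel = -(G / LENGTH) * math.sin(theta)
        omega = omega_half + 0.5 * dt * accel
        ke, pe = energies(theta, omega)
        max_ke = max(max_ke, ke)
        max_drift = max(max_drift, abs(ke + pe - total0))
    return total0, max_ke, max_drift


total0, max_ke, max_drift = simulate()
print(f"initial energy {total0:.6f} J, peak kinetic {max_ke:.6f} J, max drift {max_drift:.2e} J")
```

Velocity Verlet is used here because, unlike simple forward Euler, it does not steadily pump energy into the swing, so any drift that appears is numerical noise rather than a physical effect.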

Energy gives rise to weight and is equivalent to matter, and vice versa. The formula E = mc², derived by Albert Einstein (1905), quantifies the relationship between mass and rest energy within special relativity. In different theoretical frameworks, similar formulas were derived by J. J. Thomson (1881), Henri Poincaré (1900), Friedrich Hasenöhrl (1904) and others (see the history of mass-energy equivalence for further information). Since c² is extremely large relative to ordinary human scales, converting an ordinary amount of mass (say, 1 kg) to other forms of energy can liberate tremendous amounts of energy (~9×10¹⁶ joules), as can be seen in nuclear reactors and nuclear weapons. Conversely, the mass equivalent of a unit of energy is minuscule, which is why a loss of energy from most systems is difficult to measure by weight unless the energy loss is very large. Examples of energy being transformed into matter (particles) are found in high-energy nuclear physics.
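Both directions of the conversion are easy to check. This short Python sketch evaluates E = mc² for 1 kg and, going the other way, the mass equivalent of one kilowatt-hour (3.6×10⁶ J), a quantity picked here only as a familiar everyday unit.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (exact by SI definition)


def rest_energy(mass_kg):
    """Rest energy E = m c^2 in joules."""
    return mass_kg * C ** 2


def mass_equivalent(energy_j):
    """Mass equivalent m = E / c^2 in kilograms."""
    return energy_j / C ** 2


print(f"1 kg of mass    -> {rest_energy(1.0):.3e} J")       # about 9e16 J
print(f"1 kWh of energy -> {mass_equivalent(3.6e6):.3e} kg")  # 1 kWh = 3.6e6 J
```

A kilowatt-hour corresponds to roughly 4×10⁻¹¹ kg, far below what an ordinary scale can resolve, which is the point made above about weighing energy losses.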

In nature, transformations of energy can be fundamentally classed into two kinds: those that are thermodynamically reversible and those that are thermodynamically irreversible. An irreversible process in thermodynamics is one in which energy is dissipated (spread) into empty energy states available in a volume, from which it cannot be recovered into more concentrated forms (fewer quantum states) without degradation of even more energy. A reversible process is one in which this sort of dissipation does not happen. For example, conversion of energy from one type of potential field to another is reversible, as in the pendulum system described above. In processes where heat is generated, quantum states of lower energy, present as possible excitations in fields between atoms, act as a reservoir for part of the energy, from which it cannot be recovered and converted with 100% efficiency into other forms of energy. In this case, the energy must partly stay as heat and cannot be completely recovered as usable energy, except at the price of some other heat-like increase in disorder in quantum states in the universe (such as an expansion of matter, or a randomization in a crystal).

As the universe evolves in time, more and more of its energy becomes trapped in irreversible states (i.e., as heat or other kinds of increases in disorder). This has been referred to as the inevitable thermodynamic heat death of the universe. In this heat death the energy of the universe does not change, but the fraction of energy that is available to produce work through a heat engine, or to be transformed into other usable forms of energy (through generators attached to heat engines), grows less and less.

Wiki

Depleted Uranium

Depleted uranium (DU) is uranium primarily composed of the isotope uranium-238 (U-238). Natural uranium is about 99.27 percent U-238, 0.72 percent U-235, and 0.0055 percent U-234. U-235 is used for fission in nuclear reactors and nuclear weapons. Uranium is enriched in U-235 by separating the isotopes by mass. The byproduct of enrichment, called depleted uranium or DU, contains less than one third as much U-235 and U-234 as natural uranium. The external radiation dose from DU is about 60 percent of that from the same mass of natural uranium.
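Why enrichment leaves so much DU behind can be seen from a simple U-235 mass balance: feed = product + tails, and the U-235 in the feed equals the U-235 in the product plus the tails. The Python sketch below solves those two equations. The 4% product assay and 0.20% tails assay are hypothetical inputs chosen for illustration (0.20% is below one third of the 0.72% natural value), and the sketch ignores U-234 and process losses.

```python
def enrichment_mass_balance(x_feed, x_product, x_tails, product_kg=1.0):
    """Return (feed_kg, tails_kg) needed for product_kg of enriched uranium.

    Solves F = P + T and F * x_feed = P * x_product + T * x_tails,
    where the x_* values are U-235 mass fractions.
    """
    if not x_tails < x_feed < x_product:
        raise ValueError("need x_tails < x_feed < x_product")
    feed = product_kg * (x_product - x_tails) / (x_feed - x_tails)
    tails = feed - product_kg  # the depleted stream, i.e. DU
    return feed, tails


# Hypothetical assays: natural feed 0.72%, 4% product, 0.20% tails.
feed, tails = enrichment_mass_balance(0.0072, 0.04, 0.002)
print(f"feed {feed:.2f} kg, depleted tails {tails:.2f} kg per kg of product")
```

Under these assumed assays, every kilogram of enriched product leaves several kilograms of depleted tails, which is why DU accumulates in such large quantities.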

DU is also found in reprocessed spent nuclear reactor fuel, but that kind can be distinguished from DU produced as a byproduct of uranium enrichment by the presence of U-236.[3] In the past, DU has been called Q-metal, depletalloy, and D-38.

DU is useful because of its very high density of 19.1 g/cm³. Civilian uses include counterweights in aircraft, radiation shielding in medical radiation therapy and industrial radiography equipment, and containers used to transport radioactive materials. Military uses include defensive armor plating and armor-piercing projectiles.

The use of DU in munitions is controversial because of questions about potential long-term health effects.[4][5] Normal functioning of the kidney, brain, liver, heart, and numerous other systems can be affected by uranium exposure because, in addition to being weakly radioactive, uranium is a toxic metal.[6] It remains weakly radioactive because of its long physical half-life (4.468 billion years for uranium-238), but it has a considerably shorter biological half-life. The aerosol produced during the impact and combustion of depleted uranium munitions can potentially contaminate wide areas around the impact sites or be inhaled by civilians and military personnel.[7] During a three-week period of conflict in Iraq in 2003, 1,000 to 2,000 tonnes of DU munitions were used, mostly in cities.[8]
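The link between a very long half-life and weak radioactivity can be shown with a few lines of arithmetic. This Python sketch uses the 4.468-billion-year half-life quoted above to compute how little U-238 decays in ten thousand years and its specific activity from A = λN. The Julian-year conversion and the approximate molar mass of 238.05 g/mol are assumptions, and the result covers U-238 alone, not its decay products or the small U-235 and U-234 fractions in DU.

```python
import math

HALF_LIFE_YEARS = 4.468e9          # U-238 physical half-life (from the text)
SECONDS_PER_YEAR = 365.25 * 86400  # Julian year (assumption)
AVOGADRO = 6.02214076e23           # atoms per mole (exact SI value)
MOLAR_MASS_U238 = 238.05           # g/mol (approximate)


def fraction_remaining(years):
    """Fraction of an initial U-238 sample still undecayed after `years`."""
    return 0.5 ** (years / HALF_LIFE_YEARS)


def specific_activity_bq_per_g():
    """Decays per second per gram of pure U-238: A = lambda * N."""
    decay_constant = math.log(2) / (HALF_LIFE_YEARS * SECONDS_PER_YEAR)  # 1/s
    atoms_per_gram = AVOGADRO / MOLAR_MASS_U238
    return decay_constant * atoms_per_gram


print(f"fraction left after 10,000 years: {fraction_remaining(1e4):.9f}")
print(f"specific activity: {specific_activity_bq_per_g():.3g} Bq/g")
```

The same long half-life that keeps the activity per gram low also means that activity is essentially unchanged on any human timescale.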

The actual acute and chronic toxicity of DU is also a point of medical controversy. Multiple studies using cultured cells and laboratory rodents suggest the possibility of leukemogenic, genetic, reproductive, and neurological effects from chronic exposure.[4] A 2005 epidemiology review concluded: "In aggregate the human epidemiological evidence is consistent with increased risk of birth defects in offspring of persons exposed to DU."[9] The World Health Organization states that no consistent risk of reproductive, developmental, or carcinogenic effects has been reported in humans.[10][11] However, the objectivity of the WHO report has been called into question.

Wiki

Wednesday, June 23, 2010

Kinetic Energy

The kinetic energy of an object is the extra energy which it possesses due to its motion. It is defined as the work needed to accelerate a body of a given mass from rest to its current velocity. Having gained this energy during its acceleration, the body maintains this kinetic energy unless its speed changes. Negative work of the same magnitude would be required to return the body to a state of rest from that velocity.

The kinetic energy of a single object is completely frame-dependent (relative): it can take any non-negative value, by choosing a suitable inertial frame of reference. For example, a bullet racing by a non-moving observer has kinetic energy in the reference frame of this observer, but the same bullet has zero kinetic energy in the reference frame which moves with the bullet. By contrast, the total kinetic energy of a system of objects is not completely removable by a suitable choice of the inertial reference frame, unless all the objects have the same velocity. In any other case the total kinetic energy is at least equal to a non-zero minimum which is independent of the inertial reference system. This kinetic energy (if present) contributes to the system's invariant mass, which is seen as the same value in all reference frames, and by all observers.
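A short one-dimensional, Newtonian sketch illustrates the point. It computes the total kinetic energy of a hypothetical two-body system (masses and velocities chosen for illustration) as seen from frames moving at different velocities. The total is smallest in the center-of-momentum frame, where it equals (1/2)·μ·v_rel² with μ the reduced mass, and it never reaches zero because the two bodies move differently. The relativistic invariant-mass aspect is not modelled here.

```python
def total_kinetic_energy(masses, velocities, frame_velocity=0.0):
    """Total Newtonian kinetic energy (J) seen from a frame moving at frame_velocity (m/s)."""
    return sum(0.5 * m * (v - frame_velocity) ** 2 for m, v in zip(masses, velocities))


def center_of_momentum_velocity(masses, velocities):
    """Velocity of the frame in which the total momentum is zero."""
    return sum(m * v for m, v in zip(masses, velocities)) / sum(masses)


masses = [2.0, 1.0]       # kg (hypothetical)
velocities = [3.0, -3.0]  # m/s along one axis (hypothetical)

v_cm = center_of_momentum_velocity(masses, velocities)
for frame in (0.0, v_cm, 3.0):
    ke = total_kinetic_energy(masses, velocities, frame)
    print(f"frame velocity {frame:+.1f} m/s -> total KE {ke:.1f} J")

# A single body, like the bullet above, has zero kinetic energy in its own rest frame.
print(total_kinetic_energy([0.01], [400.0], 400.0))  # 0.0
```

Here the lab frame sees 13.5 J, the center-of-momentum frame 12 J, and a frame riding with the heavier body 18 J: no choice of frame removes the 12 J associated with the relative motion.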

The kinetic energy of an object of mass m traveling at a speed v is mv²/2, provided v is much less than the speed of light.
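To see what "much less than the speed of light" means in practice, the Python sketch below compares mv²/2 with the special-relativistic kinetic energy (γ - 1)mc², where γ = 1/√(1 - v²/c²). The relativistic formula is standard special relativity rather than something stated in this post. Here γ - 1 is rewritten algebraically as β²/(s(1 + s)), with β = v/c and s = √(1 - β²), to avoid round-off at low speeds. The 1 kg test mass is arbitrary.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def ke_classical(m, v):
    """Newtonian kinetic energy m v^2 / 2."""
    return 0.5 * m * v * v


def ke_relativistic(m, v):
    """(gamma - 1) m c^2, computed in a form that stays accurate when v << c."""
    beta2 = (v / C) ** 2
    if beta2 >= 1.0:
        raise ValueError("speed must be below the speed of light")
    s = math.sqrt(1.0 - beta2)
    gamma_minus_one = beta2 / (s * (1.0 + s))  # algebraically equal to 1/s - 1
    return gamma_minus_one * m * C ** 2


for v in (1.0e3, 0.1 * C, 0.5 * C, 0.9 * C):
    ratio = ke_relativistic(1.0, v) / ke_classical(1.0, v)
    print(f"v = {v / C:.2e} c: relativistic / classical = {ratio:.4f}")
```

At everyday speeds the two formulas agree to many decimal places; by half the speed of light the classical value underestimates the kinetic energy by roughly a fifth.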

History and etymology